Microsoft Copilot Agent Policy Flaw Lets Any User Access AI Agents

Microsoft has disclosed a critical flaw in its Copilot agents’ governance framework that allows any authenticated user to access and interact with AI agents within an organization—bypassing intended policy controls and exposing sensitive operations to unauthorized actors.

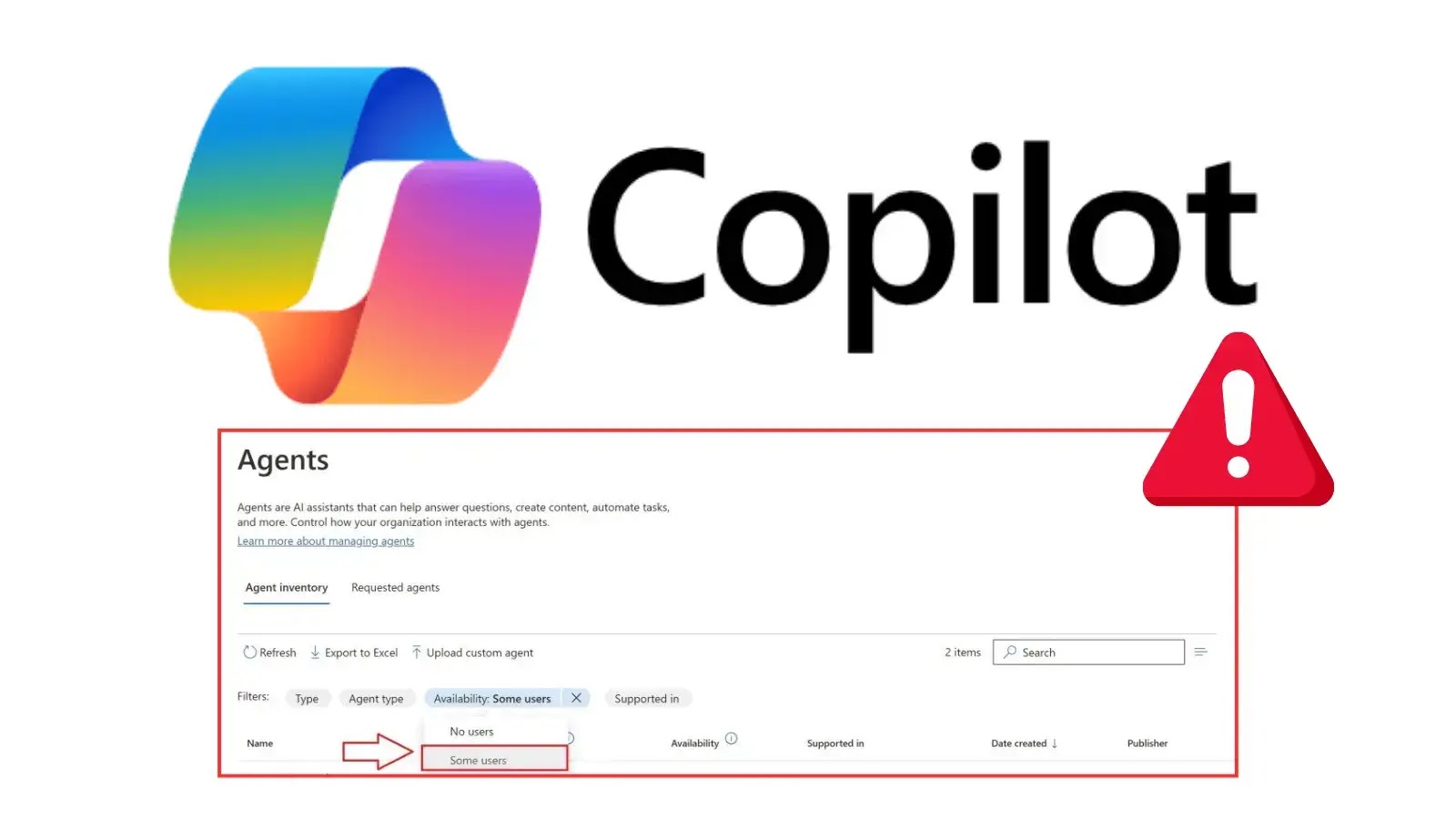

At the core of the issue is the way Copilot Agent Policies are enforced—or, more accurately, not enforced—when users enumerate and invoke AI agents.

An organization’s administrators define per-user or per-group policy rules dictating who can see and operate specific AI agents.

However, due to a lapse in policy evaluation, these controls are only applied to agents listed through the Microsoft 365 admin center’s management APIs, not to the broader Graph API endpoints that both GUI clients and script-based integrations use to discover and call agents.

In practice, this means that while the admin center will correctly hide or restrict agents based on policy, any user with basic access to Graph API calls (granted by default with every Microsoft 365 license) can retrieve the full roster of agents, including those tagged “private” or limited to privileged roles.

With a simple GET request against https://graph.microsoft.com/beta/ai/agents/, unauthorized users can gather agent identifiers, metadata, and endpoints. More troubling, they can then invoke those agents by posting prompts to their execution endpoints without being blocked by policy checks.
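For administrators who want to check their own exposure, the comparison can be sketched offline. This is a minimal sketch, not Microsoft's tooling: it assumes the agents endpoint returns the usual Graph collection shape (a `value` array of objects with an `id` field), which the disclosure does not confirm. The function name `find_policy_leaks` and the sample agent IDs are hypothetical. It takes a response already captured with a low-privilege test account and lists every agent that the account's policy should have hidden.

```python
from typing import Iterable


def find_policy_leaks(graph_response: dict, allowed_agent_ids: Iterable[str]) -> list[str]:
    """Return IDs of agents present in the response but not permitted by policy.

    graph_response: parsed JSON from the agents listing (assumed shape:
    {"value": [{"id": ...}, ...]}; this shape is an assumption).
    allowed_agent_ids: agent IDs the test account is supposed to see.
    """
    allowed = set(allowed_agent_ids)
    agents = graph_response.get("value", [])
    # Anything visible to the account but absent from the allow-list is a leak.
    return sorted(a["id"] for a in agents if a.get("id") and a["id"] not in allowed)


# Hypothetical captured response for a low-privilege test account.
sample_response = {
    "value": [
        {"id": "agent-hr-briefing", "displayName": "HR Briefing"},
        {"id": "agent-cred-rotation", "displayName": "Credential Rotation"},
    ]
}

leaked = find_policy_leaks(sample_response, allowed_agent_ids=["agent-hr-briefing"])
print(leaked)
```

An empty result for a test account after patching would suggest that listing is being filtered as the policy intends. It says nothing about whether invocation is also blocked, which needs to be verified separately.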

The Microsoft engineer who discovered and reported the flaw described policy gating in the admin portal as effectively window dressing: “We thought tenant administrators had exclusive visibility into their AI agents, but the enforcement plane in Graph was wide open.”

This gap not only undermines an organization’s zero-trust posture but also introduces significant security and compliance risks.

Sensitive automation workflows—spanning tasks like privileged credential rotation, data classification orchestration, or executive briefing generation—are suddenly within reach of every user in the tenant.

The vulnerability, tracked as CVE-2025-XXXX, carries a severity rating of 9.1 (Critical) on the CVSS 3.1 scale.

Microsoft verified the proof-of-concept exploit within 24 hours, issued a patched version of the policy enforcement middleware in August, and notified impacted customers via the Microsoft 365 Message Center.

Administrators must apply the August 2025 update to Copilot for Microsoft 365 and monitor their agent catalog for irregular access patterns.

Industry experts emphasize that while Microsoft moved swiftly to remediate the flaw, organizations should adopt compensating controls immediately. Recommendations include:

- Auditing Graph API permission scopes: restrict unnecessary access to AI-related endpoints.

- Implementing conditional access policies: require multi-factor authentication and device compliance for Graph API usage.

- Monitoring unusual agent invocation: set up SIEM alerts for high volumes of agent calls or out-of-hours access.

- Reviewing the agent catalog: delete unused or deprecated AI agents to reduce the attack surface.
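The monitoring recommendation above can be prototyped before it is turned into a SIEM rule. The sketch below is illustrative only: the event fields (`user`, `agent_id`, `timestamp`), the 50-call threshold, and the 08:00 to 18:00 business window are all assumptions, not values from Microsoft or from any particular log schema. It flags users who exceed a call threshold and users who invoke agents outside business hours.

```python
from collections import Counter
from datetime import datetime

# Assumed defaults; tune to the tenant's real usage patterns.
MAX_CALLS_PER_USER = 50
BUSINESS_HOURS = range(8, 18)  # hours 08 through 17 inclusive


def flag_suspicious(events, max_calls=MAX_CALLS_PER_USER, business_hours=BUSINESS_HOURS):
    """Flag users with high call volume or out-of-hours agent invocations.

    events: iterable of dicts with hypothetical keys "user", "agent_id",
    and "timestamp" (ISO 8601 local time, e.g. "2025-08-12T02:14:00").
    """
    events = list(events)
    calls_per_user = Counter(e["user"] for e in events)
    high_volume = {user for user, n in calls_per_user.items() if n > max_calls}
    out_of_hours = {
        e["user"]
        for e in events
        if datetime.fromisoformat(e["timestamp"]).hour not in business_hours
    }
    return {"high_volume": sorted(high_volume), "out_of_hours": sorted(out_of_hours)}


# Hypothetical invocation log: alice calls often, bob calls at 02:14.
sample_events = [
    {"user": "alice", "agent_id": "agent-hr-briefing", "timestamp": "2025-08-12T10:00:00"},
    {"user": "alice", "agent_id": "agent-hr-briefing", "timestamp": "2025-08-12T10:05:00"},
    {"user": "alice", "agent_id": "agent-hr-briefing", "timestamp": "2025-08-12T10:10:00"},
    {"user": "bob", "agent_id": "agent-cred-rotation", "timestamp": "2025-08-12T02:14:00"},
]

print(flag_suspicious(sample_events, max_calls=2))
```

Fixed thresholds like these are a starting point. A production rule would more likely compare each user against their own baseline and account for time zones.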

This incident underscores the growing pains of integrating AI automation into enterprise environments.

As AI agents become mission-critical, ensuring that governance and policy enforcement are airtight across all API layers is essential.

Even industry giants like Microsoft can overlook subtle gaps between policy enforcement planes, but rapid detection, transparent disclosure, and swift patching remain the gold standard for maintaining trust in AI-driven productivity tools.
