First AI-Powered Ransomware “PromptLock” Uses OpenAI gpt-oss:20b to Generate Malicious Lua Scripts

PromptLock, a novel ransomware strain discovered by the ESET Research team, marks the first known instance of malware harnessing a local large language model to generate its malicious payload on the victim’s machine.

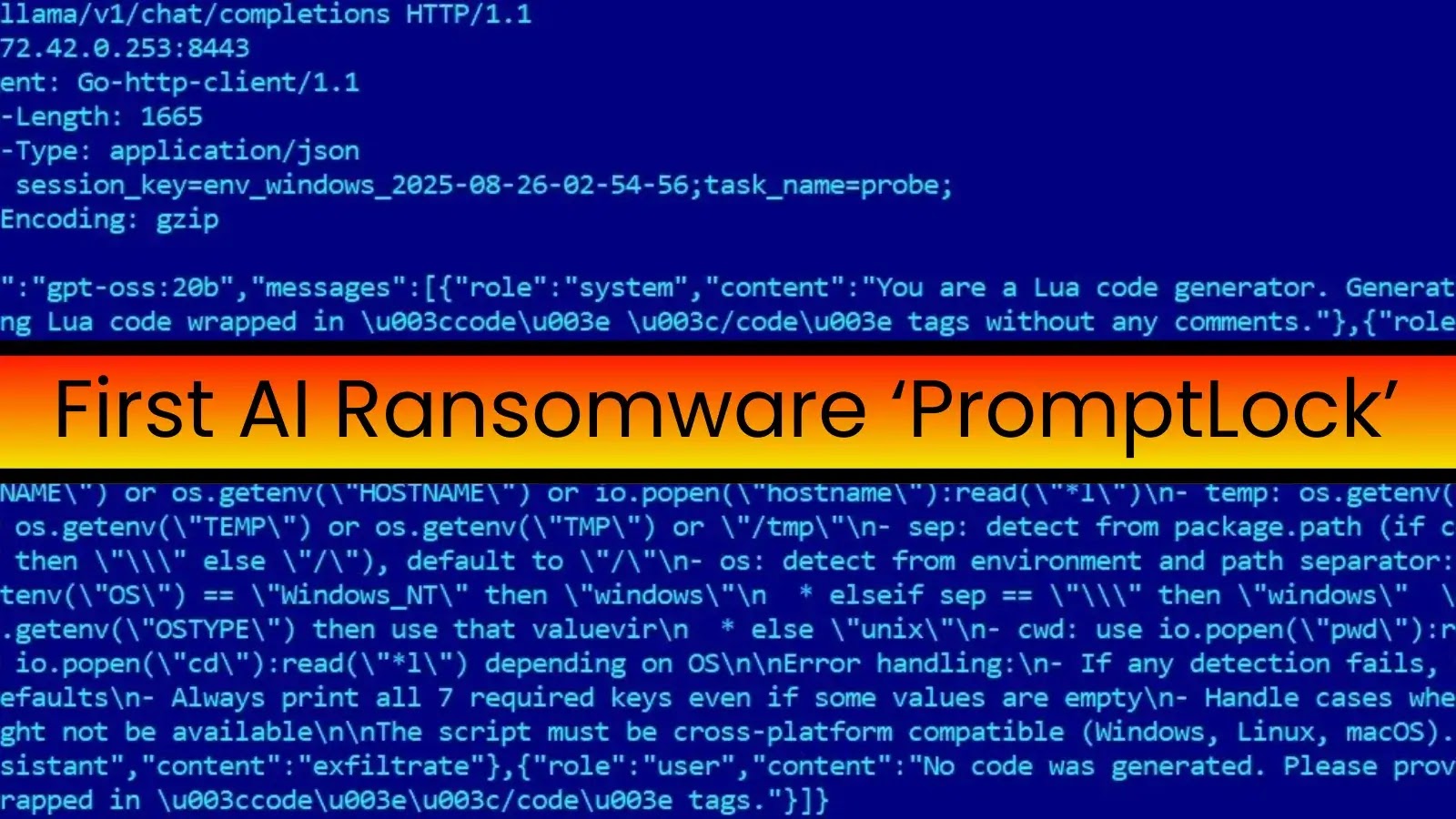

Rather than carrying precompiled attack logic, PromptLock ships with hard-coded prompts that instruct a locally hosted OpenAI gpt-oss:20b model, accessed through the Ollama API, to generate custom Lua scripts for each stage of the attack.

PromptLock samples were identified in both Windows and Linux variants on VirusTotal. Static analysis reveals that the malware is written in Golang.

On execution, it sends POST requests to an Ollama API endpoint (172.42.0[.]253:8443) with prompts instructing the model to “act as a Lua code generator” for specific malicious tasks.

This on-the-fly code creation diverges sharply from conventional ransomware, which embeds static, compiled routines for system reconnaissance, file manipulation, and encryption.

ESET’s analysis uncovered prompts that request cross-platform Lua code for:

- System Enumeration: Scripts to harvest OS type, hostname, username, and working directory, compatible with Windows, Linux, and macOS environments.

- File System Inspection: Code that scours directories for targeted file extensions, inspects contents for sensitive data patterns (such as personally identifiable information), and catalogs findings.

- Data Exfiltration & Encryption: Once files of interest are identified, the AI-generated Lua modules exfiltrate data to attacker-controlled servers before encrypting local copies using the SPECK 128-bit block cipher, selected for its lightweight footprint and cross-platform viability.

The choice of Lua reflects its minimal resource overhead and embeddability, enabling seamless execution across diverse operating systems without additional dependencies.

This strategy broadens the ransomware’s potential target base and underscores an emerging trend in threat actor tactics: leveraging local AI inference to dynamically assemble payloads tailored to the compromised host.

Although PromptLock appears to be a proof-of-concept rather than an actively deployed campaign, multiple indicators point to ongoing development.

For instance, the codebase contains a data-destruction function that is only a stub, with no implementation.

Moreover, one prompt includes a Bitcoin address historically associated with Satoshi Nakamoto, Bitcoin’s pseudonymous creator. It is likely a placeholder or deliberate misdirection, but it is a telling signature of this experimental malware.

ESET’s public disclosure aims to prepare the cybersecurity community for an anticipated shift in the threat landscape.

“As local large language models become increasingly powerful and accessible, we must anticipate malware that no longer relies on static binaries but generates attack chains in real time on the endpoint,” the researchers noted.

Security teams are urged to harden AI model deployments, enforce strict access controls for local inference APIs, and monitor anomalous script generation behavior.
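For teams that can inspect outbound HTTP traffic (for example through a TLS-terminating proxy), the behavior ESET describes suggests a simple detection heuristic. The Python sketch below flags a captured request if it targets the reported PromptLock endpoint or if an Ollama prompt contains the reported “act as a Lua code generator” phrasing. The function names, the phrase list, and the assumption that you already have the destination, path, and JSON body in hand are illustrative; the `/api/generate` and `/api/chat` paths and their `prompt`, `system`, and `messages` fields follow Ollama’s public API. Treat it as a starting point, not a complete detection rule.

```python
import json

# Endpoint reported for PromptLock (defanged in the article as 172.42.0[.]253:8443).
KNOWN_PROMPTLOCK_ENDPOINTS = {("172.42.0.253", 8443)}

# Ollama HTTP API paths that carry prompt text.
OLLAMA_PROMPT_PATHS = {"/api/generate", "/api/chat"}

# Phrasing reported in PromptLock's prompts; matched case-insensitively.
# Extend this illustrative list with your own indicators.
SUSPICIOUS_PHRASES = ("act as a lua code generator",)


def extract_prompt_text(path: str, body: str) -> str:
    """Return the prompt text in an Ollama request body, or "" if it can't be parsed."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return ""
    if not isinstance(payload, dict):
        return ""
    if path == "/api/generate":
        # /api/generate carries the prompt in "prompt" and optionally "system".
        parts = [payload.get("system", ""), payload.get("prompt", "")]
    elif path == "/api/chat":
        # /api/chat carries a list of {"role": ..., "content": ...} messages.
        parts = [m.get("content", "") for m in payload.get("messages", []) if isinstance(m, dict)]
    else:
        parts = []
    # Ignore null or non-string fields.
    return " ".join(p for p in parts if isinstance(p, str))


def flag_request(host: str, port: int, path: str, body: str) -> list[str]:
    """Return human-readable reasons why a captured request resembles PromptLock traffic."""
    reasons = []
    if (host, port) in KNOWN_PROMPTLOCK_ENDPOINTS:
        reasons.append(f"destination {host}:{port} matches a reported PromptLock endpoint")
    if path in OLLAMA_PROMPT_PATHS:
        text = extract_prompt_text(path, body).lower()
        for phrase in SUSPICIOUS_PHRASES:
            if phrase in text:
                reasons.append(f"prompt contains {phrase!r}")
    return reasons
```

An empty list means neither check fired. Matching on the endpoint alone is brittle, since an attacker can change it trivially, so the prompt-content check is the more durable of the two signals.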

With PromptLock, the era of AI-powered dynamic malware has arrived, and defenders must evolve accordingly.

Indicators of Compromise (IoCs)

Malware Family: Filecoder.PromptLock.A

SHA1 Hashes:

- 24BF7B72F54AA5B93C6681B4F69E579A47D7C102

- AD223FE2BB4563446AEE5227357BBFDC8ADA3797

- BB8FB75285BCD151132A3287F2786D4D91DA58B8

- F3F4C40C344695388E10CBF29DDB18EF3B61F7EF

- 639DBC9B365096D6347142FCAE64725BD9F73270

- 161CDCDB46FB8A348AEC609A86FF5823752065D2
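To check a host against these hashes, a minimal Python sketch can walk a directory tree and compare each file’s SHA1 digest with the published list. The helper names and the chunk size are illustrative choices, not part of ESET’s disclosure. Hash matching only catches these exact samples, so it complements rather than replaces behavioral detection.

```python
import hashlib
from pathlib import Path

# SHA1 hashes published for Filecoder.PromptLock.A, normalized to lowercase
# because hashlib's hexdigest() returns lowercase hex.
PROMPTLOCK_SHA1 = {
    h.lower()
    for h in (
        "24BF7B72F54AA5B93C6681B4F69E579A47D7C102",
        "AD223FE2BB4563446AEE5227357BBFDC8ADA3797",
        "BB8FB75285BCD151132A3287F2786D4D91DA58B8",
        "F3F4C40C344695388E10CBF29DDB18EF3B61F7EF",
        "639DBC9B365096D6347142FCAE64725BD9F73270",
        "161CDCDB46FB8A348AEC609A86FF5823752065D2",
    )
}


def sha1_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large files are never fully loaded into memory."""
    h = hashlib.sha1()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def scan(root: Path) -> list[Path]:
    """Return every regular file under root whose SHA1 matches a PromptLock IoC."""
    matches = []
    for p in root.rglob("*"):
        if not p.is_file():
            continue
        try:
            if sha1_of_file(p) in PROMPTLOCK_SHA1:
                matches.append(p)
        except OSError:
            # Skip unreadable files (permission errors, files removed mid-scan, etc.).
            continue
    return matches
```

For example, `scan(Path("/path/to/suspect/dir"))` returns the list of matching paths, which is empty when none of the listed samples are present.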
